Story at a glance:

- Real-time rendering allows architects to analyze, process, and publish detailed 3D images and scenes instantly.

- Real-time renderings help design teams make informed decisions, save time and resources, improve cost control, and give clients a better understanding of a project.

- Enscape is a real-time rendering and virtual reality tool designed for architects that plugs directly into the modeling software a firm already uses.

Two-dimensional blueprints and three-dimensional models—both physical and digital—have long served architects well, but they aren’t always ideal for presenting design concepts to clients with a limited understanding of the technical aspects of the AEC industry.

As a result, architects are increasingly turning to real-time rendering to better present their ideas through the creation of highly detailed, immersive, and interactive 3D representations.

Let’s explore the benefits of real-time renderings and more.

What is Real-Time Rendering?

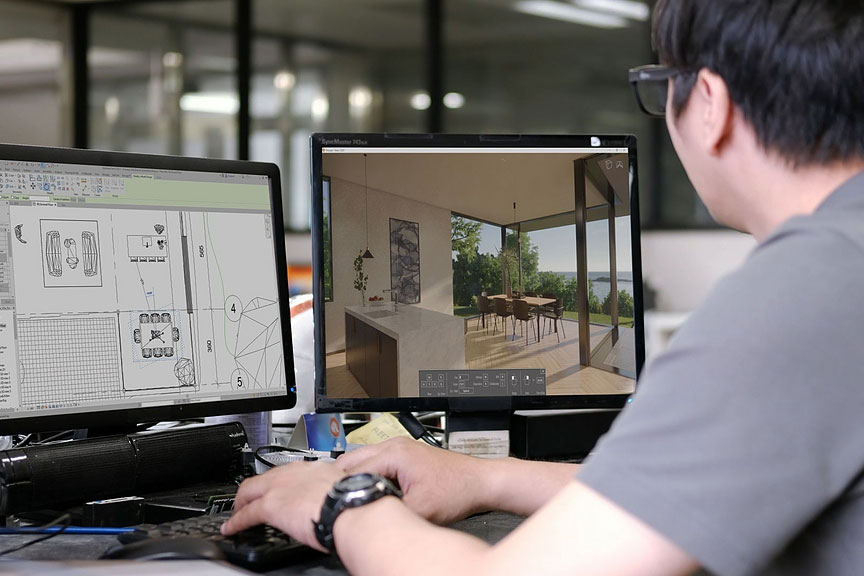

Real-time rendering is a sub-field of computer graphics that lets the user render extremely detailed, immersive scenes extremely quickly and is used in everything from video games and film to architecture and design. Photo courtesy of Enscape

Real-time rendering, or real-time visualization, is a sub-field of computer graphics that is best described as the analyzing, processing, and publishing of data in real time. Real-time visualization allows detailed 3D images and animations to be rendered extremely quickly (in under 33 milliseconds per frame, or roughly 30 frames per second) and operates in a continuous feedback loop that responds to user input instantaneously. This is achieved through the efficient manipulation of key geometric data and expert replication of physical properties like texture, color, light, and shadow.
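
The 33-millisecond figure is easier to read as a frame rate. The short sketch below shows the arithmetic; the function names are illustrative and are not taken from any rendering package.

```python
def frame_budget_ms(frames_per_second: float) -> float:
    """Time available to render a single frame, in milliseconds."""
    return 1000.0 / frames_per_second


def frames_per_second(frame_time_ms: float) -> float:
    """Frame rate achieved when every frame takes frame_time_ms to render."""
    return 1000.0 / frame_time_ms


print(round(frame_budget_ms(30), 1))    # 33.3
print(round(frames_per_second(33), 1))  # 30.3
```

A render that takes minutes per image, by comparison, is many thousands of times over this budget, which is why pre-rendered output cannot respond to user input.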

Technologically, real-time rendering is nothing new; these renderings have been a staple in the video-game industry for decades and are key to immersive gaming experiences. Real-time rendering has also found a place in filmmaking as a visual-effects tool and has even helped spur advancements in virtual reality.

It is only fairly recently, however, that architects and engineers have begun using real-time rendering software in place of industry-standard pre-rendering programs to better present their ideas and designs to clients.

Pre-Rendering vs Real-Time Rendering

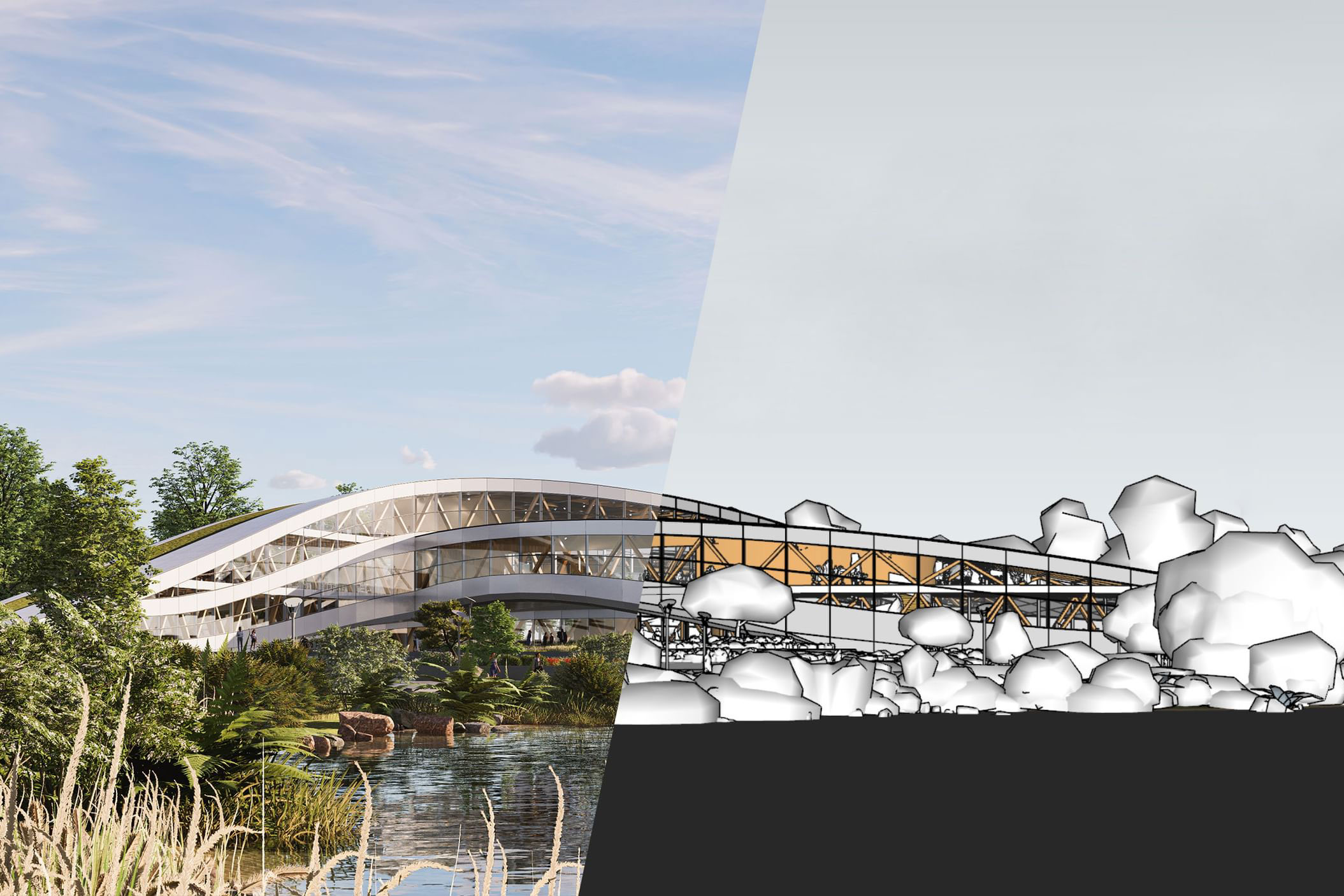

The most significant differences between pre- and real-time rendering are their speed and amount of interactivity. Pre-rendering refers to the creation of static images or videos in advance and saving them for further alteration. This method allows for the creation of a highly polished finished product but offers very little by way of interactivity and typically takes anywhere from minutes to hours to render.

Real-time rendering, on the other hand, allows the user to create highly interactive, easily manipulated 3D simulations that render in less than a second. Architects and their clients can then “walk through” spaces in real time and explore every single corner of a design as it would appear in context. “Real-time visualization has made the process of illustrating architectural designs easier and faster,” Dinnie Musilhat, part of the content team at Enscape, previously wrote for gb&dPRO. “It translates 3D models into something tangible and understandable for people with limited knowledge of technical architectural aspects.”

How Does Real-Time Rendering Work?

Real-time rendering is founded on what is referred to as the graphics rendering pipeline, a computer graphics framework that identifies the necessary steps for turning a three-dimensional scene or model into a two-dimensional representation on a screen. This pipeline can be divided into three core stages: application, geometry, and rasterization or ray tracing.

Application

Real-time rendering’s application stage prepares graphics data and produces rendering primitives for the following geometry stage. Rendering courtesy of Enscape

As the first stage in real-time rendering, the application stage is responsible for generating scenes, or 3D settings that are then drawn to a 2D display. Because this process is executed by software run by the CPU, the developer has full control over what happens during the application stage and can modify it to improve performance.

Common processing operations performed by the application stage include speed-up techniques, collision detection, animation and force feedback, as well as the handling of user input. This application stage is also responsible for preparing graphics data for the next stage by way of geometry morphing, animation of 3D models, animation via transforms, and texture animations.

The most important part of the application stage, however, is the production of rendering primitives based on scene information. Primitives are the simplest geometric shapes the system can handle (e.g. points, lines, and triangles) and are what might eventually end up on screen. The application stage feeds these primitives into the subsequent geometry stage.
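
As a rough illustration of what producing primitives can mean, the sketch below splits one flat wall face into two triangles. The `Triangle` type and `quad_to_triangles` helper are hypothetical names chosen for this example, not part of any particular renderer.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Triangle:
    """One rendering primitive: three corner points in model space."""
    a: Vec3
    b: Vec3
    c: Vec3


def quad_to_triangles(p0: Vec3, p1: Vec3, p2: Vec3, p3: Vec3) -> List[Triangle]:
    """Split a four-sided face (corners given in order) into two triangles."""
    return [Triangle(p0, p1, p2), Triangle(p0, p2, p3)]


# A 4 m wide, 3 m tall wall described by its four corners.
wall = quad_to_triangles(
    (0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (4.0, 3.0, 0.0), (0.0, 3.0, 0.0)
)
print(len(wall))  # 2
```

Every surface in a building model, however complex, can be broken down this way before it is handed to the geometry stage.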

Geometry

The geometry stage of real-time rendering is the most complex and is responsible for computing what to draw, how to draw it, and where to draw it. Image courtesy of Enscape

The second stage of real-time rendering, the geometry stage, is responsible for manipulating polygons and vertices to compute what, how, and where to draw. This stage encompasses multiple sub-stages: model and view transform, vertex shading, projection, clipping, and screen mapping.

Model & View Transform

Before they can be sent to the screen, models must first be transformed into several different coordinate systems or spaces. Once a model has been created, it is said to exist within its own model space, which essentially means it has yet to be transformed. Each model is then associated with its own model transform, which allows the model to be positioned and oriented.

During the model transform, the vertices and normals of the model are transformed, which in turn moves the model from its model coordinates to world space. All models exist in the same world space once they have been transformed with their respective model transforms, and it is in this world space that the second stage of transformation, the view transform, happens.

The view transform is applied to the camera, which also has a location and direction in world space, as well as to the models themselves. Its purpose is to place the camera at the origin and aim it in the direction of the negative z-axis, with this new space referred to as the camera or eye space. Only those models that fall within the camera’s view volume at any given point in time are rendered.
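
The two transforms can be sketched with 4x4 matrices. This is a minimal example in plain Python, assuming a camera that sits at (0, 0, 10) and already faces the negative z-axis, so the view transform reduces to a translation; the helper names are illustrative only.

```python
import math


def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as nested lists (row-major)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def apply(m, point):
    """Transform a 3D point by a 4x4 matrix using homogeneous coordinates."""
    v = (point[0], point[1], point[2], 1.0)
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))


def translation(tx, ty, tz):
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def rotation_y(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s, 0.0],
            [0.0, 1.0, 0.0, 0.0],
            [-s, 0.0, c, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


# Model transform: rotate the model 90 degrees about y, then move it 2 units along x.
model = mat_mul(translation(2.0, 0.0, 0.0), rotation_y(math.pi / 2))
# View transform (assumed camera at z = 10, no rotation): shift the world so
# the camera ends up at the origin, looking down the negative z-axis.
view = translation(0.0, 0.0, -10.0)

vertex_model = (1.0, 0.0, 0.0)
vertex_world = apply(model, vertex_model)
vertex_eye = apply(mat_mul(view, model), vertex_model)
print(tuple(round(c, 6) for c in vertex_world))  # (2.0, 0.0, -1.0)
print(tuple(round(c, 6) for c in vertex_eye))    # (2.0, 0.0, -11.0)
```

The negative z value in eye space indicates that the vertex lies in front of the camera under this convention.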

Vertex Shading

Vertex shading is the second geometry sub-stage and is responsible for rendering the actual appearance of objects, including their material, texture, and shading. Of these, shading—or the effect of light on an object’s appearance—is arguably the most important to producing a realistic scene and is accomplished by using the material data stored at each vertex on a model to compute shading equations.

Most of these shading computations are performed during the geometry stage in world space, but some may be performed later on during the final rasterization or ray tracing stage. All vertex shading results—including vectors, colors, texture coordinates, et cetera—are then sent to the rasterization or ray tracing stage to be interpolated.
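
The article does not say which shading equations a given program uses. As one common and simple example, the sketch below applies Lambertian (diffuse) shading at a single vertex: the brightness depends on the angle between the surface normal and the direction to the light. The function names and color values are assumptions made for illustration.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lambert_shade(normal, to_light, base_color):
    """Diffuse color at a vertex: base color scaled by the cosine of the light angle."""
    intensity = max(0.0, dot(normalize(normal), normalize(to_light)))
    return tuple(channel * intensity for channel in base_color)


timber = (0.8, 0.6, 0.4)  # illustrative RGB material color
print(lambert_shade((0.0, 0.0, 1.0), (0.0, 0.0, 1.0), timber))   # (0.8, 0.6, 0.4)
print(lambert_shade((0.0, 0.0, 1.0), (0.0, 0.0, -1.0), timber))  # (0.0, 0.0, 0.0)
```

A surface facing the light receives the full material color, while one facing away receives none; the per-vertex results are what later get interpolated across each triangle.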

Projection

Once shading is complete, real-time rendering programs perform projection, a process that transforms the view volume into a unit cube referred to as the canonical view volume. This sub-stage is ultimately responsible for turning three-dimensional objects into two-dimensional projections. Two types of projection methods are used in real-time rendering: orthographic and perspective.

Orthographic projections transform the rectangular view volume characteristic of orthographic viewing into the unit cube via a combination of scaling and translation. Using this method, parallel lines remain parallel even after the transformation.

Perspective projection, on the other hand, more closely mimics human sight by ensuring that, as the distance between the camera and model increases, the model appears to grow smaller and smaller. In this way, parallel lines may appear to converge at the horizon. Rather than a rectangular box, the view volume of perspective viewing appears as a truncated pyramid with a rectangular base.
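
Both projections can be written as short functions. The sketch below assumes a camera looking down the negative z-axis and a canonical view volume spanning -1 to 1 on each axis, which is one common convention; the parameter names are illustrative.

```python
import math


def orthographic(point, left, right, bottom, top, near_z, far_z):
    """Scale and translate a box-shaped view volume into the cube [-1, 1]^3.

    near_z and far_z are the eye-space z-coordinates of the two planes.
    """
    x, y, z = point
    return (2 * (x - left) / (right - left) - 1,
            2 * (y - bottom) / (top - bottom) - 1,
            2 * (z - near_z) / (far_z - near_z) - 1)


def perspective(point, fov_y_deg, aspect, near, far):
    """Map a point inside a symmetric viewing pyramid into the cube [-1, 1]^3.

    near and far are positive distances in front of the camera.
    """
    x, y, z = point
    depth = -z  # distance in front of the camera
    half_height = math.tan(math.radians(fov_y_deg) / 2)
    return (x / (depth * half_height * aspect),
            y / (depth * half_height),
            ((far + near) * depth - 2 * far * near) / ((far - near) * depth))


print(orthographic((2.0, 1.0, -5.0), -4.0, 4.0, -2.0, 2.0, -1.0, -9.0))  # (0.5, 0.5, 0.0)

# The same offset from the view axis shrinks on screen as the point moves away.
near_point = perspective((1.0, 1.0, -2.0), 90.0, 1.0, 1.0, 100.0)
far_point = perspective((1.0, 1.0, -4.0), 90.0, 1.0, 1.0, 100.0)
print(round(near_point[0], 3), round(far_point[0], 3))  # 0.5 0.25
```

The division by depth in the perspective version is what makes distant objects appear smaller; the orthographic version has no such division, so parallel lines stay parallel.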

Clipping

After projection, real-time rendering systems use the canonical view volume to determine which primitives need to be passed on to the next stage, as only those primitives that exist wholly or partially within the view volume need to be rendered. Primitives that are already entirely within the view volume are passed on as is, but partial primitives require clipping before moving on to the final rasterization or ray tracing stage.

Any vertices outside of the view volume, for example, must be clipped against the view volume, which requires the old vertices be replaced by new ones that are located at the intersection of their respective primitives and the view volume. This process is made relatively simple by the projection matrix from the previous stage, as it ensures all transformed primitives will be clipped against the unit cube in a consistent manner.
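
To keep the example short, the sketch below clips a line segment rather than a triangle, using a parametric (Liang-Barsky style) test against each face of the cube. It assumes the canonical view volume spans -1 to 1 on every axis; `clip_segment` is a hypothetical helper name.

```python
def clip_segment(p0, p1, lo=-1.0, hi=1.0):
    """Clip the segment p0 -> p1 to the cube [lo, hi]^3.

    Returns the two (possibly new) endpoints, or None if nothing is visible.
    """
    t_enter, t_exit = 0.0, 1.0
    for a, b in zip(p0, p1):
        d = b - a
        if d == 0:
            # Segment is parallel to this pair of faces: reject if outside them.
            if a < lo or a > hi:
                return None
            continue
        t0, t1 = (lo - a) / d, (hi - a) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_enter, t_exit = max(t_enter, t0), min(t_exit, t1)
        if t_enter > t_exit:
            return None

    def point_at(t):
        return tuple(a + t * (b - a) for a, b in zip(p0, p1))

    return point_at(t_enter), point_at(t_exit)


# The end that pokes out of the cube is replaced by a vertex on the cube's face.
print(clip_segment((0.0, 0.0, 0.0), (2.0, 0.0, 0.0)))  # ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0))
print(clip_segment((2.0, 2.0, 2.0), (3.0, 3.0, 3.0)))  # None
```

Because every primitive has already been projected into the same cube, the same test works regardless of the camera settings that produced it.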

Screen Mapping

Only primitives that lie inside the view volume, including those that have been clipped, are passed on to the screen mapping sub-stage. Screen mapping is responsible for converting the still-3D coordinates of these primitives into 2D coordinates. Each primitive’s x- and y-coordinates are transformed to form screen coordinates, with the z-coordinate being unaffected by the screen mapping process. Once mapped, these new coordinates are moved along to the rasterization or ray tracing stage.
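
Screen mapping amounts to a scale and an offset. The sketch below assumes x and y arrive in the range -1 to 1 and that the screen origin is the top-left pixel, as in most window systems; both are assumptions, and the function name is illustrative.

```python
def screen_map(point, width, height):
    """Convert canonical x and y in [-1, 1] to pixel coordinates; z passes through."""
    x, y, z = point
    screen_x = (x + 1) * 0.5 * width
    screen_y = (1 - y) * 0.5 * height  # flip y: canonical +y is up, screen +y is down
    return (screen_x, screen_y, z)


print(screen_map((0.0, 0.0, 0.25), 1920, 1080))  # (960.0, 540.0, 0.25)
print(screen_map((-1.0, 1.0, 0.0), 1920, 1080))  # (0.0, 0.0, 0.0)
```

The z value is carried along untouched so that the next stage can still tell which surface is closer to the camera.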

Rasterization or Ray Tracing

Enscape uses ray tracing to better simulate reflections, soft shadows, and other optical effects in its real-time renders. Rendering courtesy of Enscape

The last stage of conventional real-time rendering is rasterization—a process that applies color to the graphics elements and turns them into pixels that are then displayed on screen. Rasterization is an object-based approach to rendering scenes, which means that all objects are painted with color ahead of time, after which point logic is applied to only show those pixels that are closest to the eye or camera.
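
The "closest to the camera" logic is commonly implemented with a depth buffer. The sketch below is a deliberately small version: it fills screen-space triangles on a character grid and keeps, for each pixel, only the color of the nearest triangle. Giving each triangle a single depth value is a simplification made for this example, since real renderers interpolate depth per pixel.

```python
def edge(a, b, p):
    """Signed area test: which side of the edge a -> b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(triangles, width, height, background="."):
    """Fill triangles into a pixel grid, keeping the nearest color per pixel."""
    color_buf = [[background] * width for _ in range(height)]
    depth_buf = [[float("inf")] * width for _ in range(height)]
    for a, b, c, depth, color in triangles:
        for y in range(height):
            for x in range(width):
                p = (x + 0.5, y + 0.5)  # sample at the pixel center
                w0, w1, w2 = edge(b, c, p), edge(c, a, p), edge(a, b, p)
                inside = (w0 >= 0 and w1 >= 0 and w2 >= 0) or \
                         (w0 <= 0 and w1 <= 0 and w2 <= 0)
                # Depth test: overwrite only if this triangle is closer.
                if inside and depth < depth_buf[y][x]:
                    depth_buf[y][x] = depth
                    color_buf[y][x] = color
    return color_buf


far_tri = ((0, 0), (8, 0), (0, 8), 5.0, "A")   # farther from the camera
near_tri = ((0, 0), (8, 0), (8, 8), 2.0, "B")  # closer to the camera
image = rasterize([near_tri, far_tri], 8, 8)
print("\n".join("".join(row) for row in image))
```

Where the two triangles overlap, the pixel shows "B" even though "A" was drawn afterward, because the depth test rejects the farther surface.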

There is, however, a more modern alternative to rasterization referred to as ray tracing, which works pixel by pixel: a ray is traced from the camera through each pixel to find the object, and therefore the color, seen at that point. Ray tracing is capable of simulating a variety of optical effects (refraction, reflections, soft shadows, depth of field, etc.) with extreme accuracy, making for a more realistic and immersive final render. The downside of ray tracing is that it is slower than rasterization and typically requires a more advanced graphics card than what most firms already use.
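
A minimal sketch of the per-pixel idea follows: one ray is cast from the camera through each pixel and tested against a single sphere. The scene, the image-plane position at z = -1, and the function names are all assumptions made for this example; production ray tracers add bounces, materials, and acceleration structures on top of this.

```python
import math


def hit_sphere(origin, direction, center, radius):
    """Distance along the ray to the nearest hit in front of the origin, or None."""
    oc = tuple(o - c for o, c in zip(origin, center))
    a = sum(d * d for d in direction)
    b = 2.0 * sum(o * d for o, d in zip(oc, direction))
    c = sum(o * o for o in oc) - radius * radius
    discriminant = b * b - 4 * a * c
    if discriminant < 0:
        return None  # the ray misses the sphere entirely
    root = math.sqrt(discriminant)
    for t in ((-b - root) / (2 * a), (-b + root) / (2 * a)):
        if t > 0:
            return t
    return None


def trace(width, height, center, radius):
    """Cast one ray per pixel from a camera at the origin looking down -z."""
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            x = (i + 0.5) / width * 2 - 1
            y = 1 - (j + 0.5) / height * 2
            hit = hit_sphere((0.0, 0.0, 0.0), (x, y, -1.0), center, radius)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows


print(hit_sphere((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), (0.0, 0.0, -5.0), 1.0))  # 4.0
print("\n".join(trace(5, 5, (0.0, 0.0, -3.0), 1.0)))
```

The cost scales with the number of pixels multiplied by the work done per ray, which helps explain why ray tracing asks more of the graphics card than rasterization does.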

Benefits of Real-Time Rendering in Architecture

Real-time renderings are an extremely useful tool in the modern architect’s toolkit. Here are some benefits.

Better Client Understanding

KeurK used real-time rendering and virtual reality to help their client—the European Medicines Agency—better understand the design of their new headquarters. Rendering courtesy of Enscape

Blueprints and 3D models have their place in architecture, but they aren’t always the best tools for conveying information to clients who may not have the same technical understanding of the process. Size and scale, for instance, can be difficult to grasp when looking at a drawing or a static model on a screen, but real-time rendering remedies this by letting the client move freely through a to-scale representation of the space.

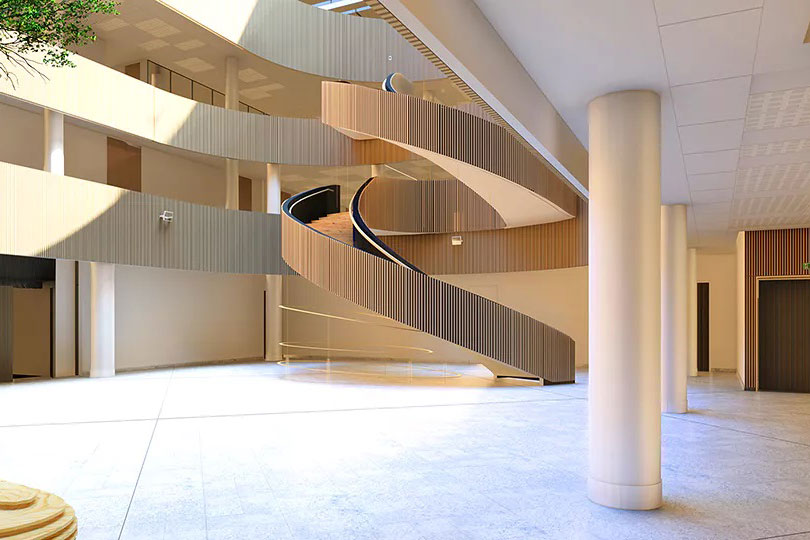

This is especially true if firms use real-time visualization in conjunction with VR technology, as it allows clients to physically walk through a 1:1 representation of the finished product and get an intimate feel for the space itself. When French architectural firm KeurK designed the new headquarters for the European Medicines Agency, it was this very same line of thinking that led them to use real-time rendering and VR to present their ideas to the client.

“Using VR allowed us to make an impression. It helped us show small details and helped people who weren’t versed in construction understand it better,” Olivier Riatuté, founder of KeurK, previously told gb&dPRO. “For instance, we could show just how monumental the staircase would look in the atrium.” Using real-time rendering to better a client’s understanding of the design ultimately makes them more confident in both their personal and the team’s choices.

Collaboration & Informed Decision-Making

Highly detailed, immersive, and accurate simulations rendered in real time help facilitate informed decision making. Rendering courtesy of Enscape

Highly detailed, realistic, and immersive real-time renders also make it easier for design teams and their clients to communicate and make informed decisions regarding certain design considerations—like layout, placement of daylighting solutions, furniture ergonomics, et cetera—that may not be possible from a 2D blueprint or 3D model alone.

When Viewport Studio, an award-winning architecture and design studio based in London and Singapore, was tasked with designing Spaceport America—the world’s first purpose-built commercial spaceport—they used real-time rendering to help make essential design decisions. Real-time visualization of lighting conditions, for example, was used to evaluate the sunlight that would reflect from windows and monitors in the control room, leading to the design team choosing to implement curtains and opaque glass in the space.

Saves Time & Resources

Real-time rendering helps save projects time and resources. Rendering courtesy of Enscape

Perhaps the most significant benefit of real-time rendering is that it helps save time and project resources. “Real-time visualization tools save time and resources in two ways: reducing the resources needed to develop a design on the front end while reducing time lost to design changes on the back end,” Dan Monaghan, business leader for Enscape’s American market, previously wrote for gb&dPRO. “This is since it is quick and easy to make design changes, create visualizations, and incorporate client feedback.”

Improved Cost Control

Real-time visualization programs can help improve cost control by reducing communication and coordination issues, leading to fewer changes throughout the construction process. Rendering courtesy of Enscape

Using real-time rendering to save time and resources has the added benefit of improving a project’s overall cost control. “Architectural visualizations can be a key aid throughout the design process for resolving coordination issues across the various disciplines, resulting in designs that are more accurate and refined, ultimately leading to fewer changes in the construction process, where the budget impact is the greatest,” Roderick Bates, head of integrated practice at Enscape, previously wrote for gb&dPRO.

Challenges of Real-Time Rendering

Real-time rendering can be difficult to master and often comes with expensive equipment requirements. Rendering courtesy of Enscape

Real-time rendering can be incredibly beneficial to architects and engineers, but it isn’t without its challenges.

Learning Curve

While it’s true that some real-time rendering programs are more intuitive and user-friendly than others, the technology nevertheless comes with a learning curve that some may find daunting. This is especially true of standalone real-time rendering programs, as they require the user to learn entirely new software that they may not have any prior experience working with.

Even real-time rendering plugins like Enscape that are compatible with most modeling and design applications come with new features that may take time for some to get used to. Effectively mastering the software can sometimes mean additional training.

Expensive System Requirements

Initial cost is often the main criticism of real-time rendering programs. Real-time rendering requires extremely powerful, optimized hardware to run, which can be costly to purchase if a firm isn’t already using such equipment. Emerging cloud-based real-time rendering solutions may offer a more affordable solution by reducing these equipment costs, but still require firms to pay a subscription for the service.

Real-time rendering programs that use ray tracing rather than rasterization also require a more advanced graphics card—such as the NVIDIA GeForce GTX 900 series or AMD Radeon RX 400 series—than what most BIM software requires, further adding to equipment expenses.

A Leader in Real-Time Rendering

Enscape is a real-time rendering plugin designed with architects and designers in mind. Rendering courtesy of Enscape

Architects and other AEC professionals looking to employ real-time rendering in their projects have myriad options when it comes to software, programs, and plugins. There are so many, in fact, that it can be difficult to parse out which will be the most beneficial. Fortunately, there’s one real-time rendering program designed especially for architects: Enscape.

Enscape is a real-time rendering and virtual reality tool that plugs directly into the modeling software an architectural firm already uses. Enscape is compatible with some of the most popular BIM and CAD programs, including ArchiCAD, Revit, Rhinoceros, SketchUp, and Vectorworks.

Some features offered by Enscape include:

- Real-time walkthroughs

- Virtual reality integration

- Collaborative annotations

- Material library with 392 materials

- Fine-tuned material editor

- Expansive 3D asset library

- Atmospheric settings

- Composition and lighting tools

- Variety of export options

Enscape is currently used by a wide range of architectural and design firms around the world and has helped improve projects of all kinds. When Intelligent City, a technology-enabled housing company headquartered in Vancouver, Canada, created Platforms for Life—a technology platform that helps design and build sustainable mid-to-high-rise mixed-use urban housing developments—they turned to Enscape to help clients visualize their projects in 3D.

“We were looking for a way to visualize the buildings quickly. If we couldn’t keep up the iterations of the generated designs, then we wouldn’t be able to visualize them properly for our clients. We needed something fast, and Enscape met our requirements,” Timo Tsui, Intelligent City’s computational design architect, previously told gb&dPRO.

All in all, Enscape is one of the best real-time rendering programs currently available to architects, greatly streamlining the visualization process and reducing the learning curve by easily integrating into a firm’s existing BIM or CAD software. To learn more about Enscape, visit the company’s website.